How to Write a Compiler #2 - Tokenization

Tokenization is the process of reading source code and splitting it into meaningful symbols that describe the program's content. It's the first step of any compiler.

What is a Token?

A token represents a single indivisible piece of source code:

- Operators -

+,-,>=,>>etc... - Literals - numbers, strings,

null,true,falseetc... - Identifiers - name of variables, functions, classes, etc...

- Keywords - identifiers with special meaning in the language

if/else,while,classetc...

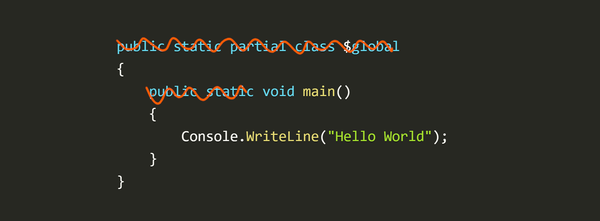

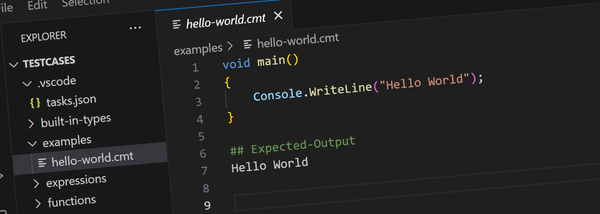

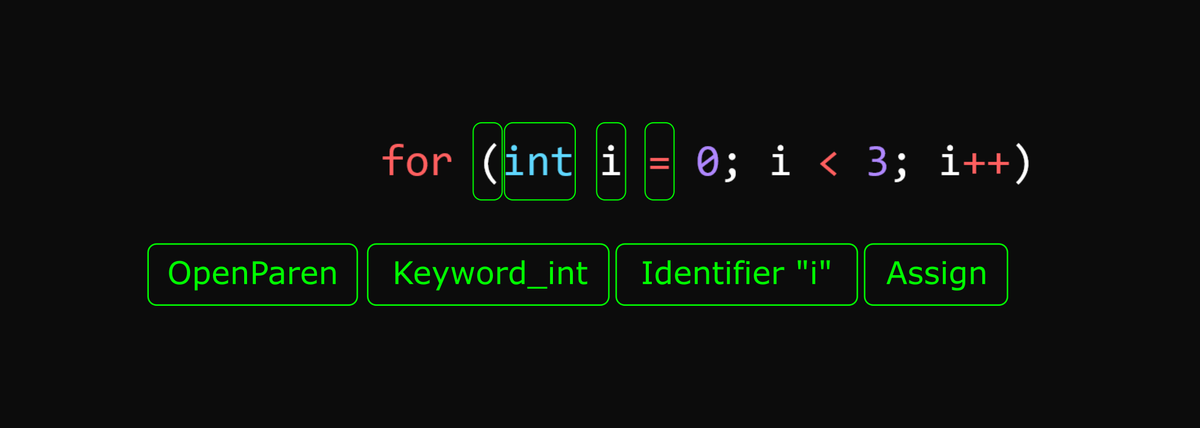

As an example, this piece of code...

// A comment

int myvar = myothervar << 4;...would be tokenized into the following tokens (assuming an enumerated type Token):

Token.Keyword_intToken.Identifier"myvar"Token.AssignmentToken.Identifier"myothervar"Token.ShiftLeftToken.Literal4Token.Semicolon

Note that whitespace and comments aren't included in the token stream.

Tokenization Algorithm

The tokenization process is to skip whitespace and comments and then look at the next character to figure out the type of token and consume as many characters as necessary to produce the token.

- Skip any whitespace

- If the next character is a

/, skip it and...- If the next character is a

*then this is a comment - skip to the end of the comment and start again from step 1. - Else, if the next character is a

/then this is a single line comment - skip to the next line and start again from step 1 - Else, if the next character is a

=, then skip it and returnToken.DivideAssign - Else, return

Token.Divide.

- If the next character is a

- If the next few characters match an operator token (eg:

+,-,<,<=,<<etc...) skip those characters and return the associated token. - If the next character is double or single quote, parse and store a string or character (while handling escape sequences) and return

Token.Literal - If the next character is a digit, skip and store all characters valid for a numeric literal, convert the number to a numeric value and return

Token.Literal. Also consume suffix letters (UandL) for unsigned and long numbers and make sure the literal value reflects those. - If the next character is a valid first character for an identifier, read all characters that are valid identifier characters and store as a string.

- If the resulting string is a named literal (eg:

true,false,null) returnToken.Literal. - If the resulting string matches a keyword, return the

Tokenfor that keyword. - Otherwise, return

Token.Identifier.

- If the resulting string is a named literal (eg:

- Throw a syntax error exception. (see

CodeExceptionbelow)

The Tokenizer Class

The Tokenizer class wraps the above algorithm. The main API to the tokenizer consists of:

Next()method - reads the next token (ie: the above algorithm)Tokenproperty - the current token (see here for a list of tokens)TokenLiteralproperty - the value of the currentToken.LiteraltokenTokenIdentifierproperty - the identifier name of the currentToken.IdentifiertokenTokenPositionproperty - position information for the current token (see below)

The tokenizer also has utility methods that can check for particular expected token and either skip it or throw an error. These are listed here and we'll see how they're used when we talk about the parser.

Related Classes

Besides the tokenizer itself, there are a number of supporting classes - primarily for tracking the position of a tokens in the source file for later error reporting:

The CodeFile Class

The tokenizer reads from the source file via the CodeFile class which:

- stores the filename of the input file

- holds the entire input file as a string

- maintains a current position in the file as an offset from the start of the file (ie: the current character index)

- provides helper methods for skipping whitespace, extracting sub-strings etc...

See here for more about this class.

File Offsets vs Line Number Positions

A file position can be represented in one of two ways:

- a character offset from the start of the file, or

- a line number and a character offset from the start of the line.

Internally, only file offsets are used because they're cheaper to store and easier to work with. When a file position needs to be presented to the user as part of an error message, it's converted to line number/offset.

In order to convert from a file offset to a line number the CodeFile class has an internal instance of the LineNumbers class that uses a list of line offsets and a binary search to quickly convert file offsets to line numbers.

The LinePosition struct encapsulates a line number and a character offset as a single value.

The CodePosition Struct

Every token has an association position represented by a CodePosition struct. It's a struct to save on memory allocations and it stores:

- a reference to the

CodeFile - a file offset

Given a CodePosition we have everything needed to construct useful position information for error messages:

- The name of the file (available via the

CodeFilereference) - The line number and offset from the start of the line (by converting the file offset using the line number mapping stored in the

CodeFile)

Error Handling

Any syntax errors encountered during tokenization and parsing cause the compilation to immediately abort by throwing a CodeException - an exception with an associated CodePosition (see here).

These exceptions are caught by the compiler and reported as errors using the message and position information stored in the CodeException.

(Some compilers will attempt error recovery from syntax errors. This is important if you need to work with the file's abstract syntax tree as the user is editing the file - eg: for code completion. We don't need that functionality so the complexity of error recovery is avoided - at least for now).

Rewinding

There are a couple of instances where the parser needs to look ahead in the token stream.

To support this the tokenizer has a Rewind method which simply resets the input position to a previously saved position and re-reads the next token.

Special Handling for Interpolated Strings

Normally the tokenizer is "context free" in that the next token can always be determined without regard to the previously supplied tokens.

The only time this isn’t true is with interpolated strings as these contain embedded expressions.

(interpolated strings are string literals with embedded expressions such as $"answer {x} + {y} = {x+y}" where x, y and x+y are expressions to be evaluated and substituted into the final string)

You'll notice the tokenizer has methods and tokens related to interpolated strings. I’ll cover these in a later article because it involves the tokenizer, the parser and the runtime.

Tokenized!

There's not much more can be said about the tokenizer - it reads a stream of input characters, strips whitespace and comments and produces tokens with position information.

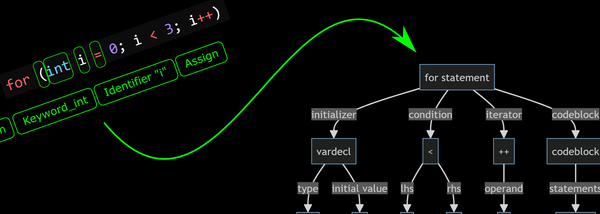

The next step is parsing, but first we need a structure in which to store the results of the parsing - an Abstract Syntax Tree.